AI agents are showing up in more parts of the customer journey, from product discovery to checkout. Fraudsters are putting them to work too, often with alarming success. In response, cyberfraud prevention leader Darwinium is launching two AI-powered features, Beagle and Copilot, that simulate adversarial behavior and help security teams stay ahead of threats.

Announced just ahead of Black Hat USA 2025, these features are designed to give defenders their own AI capabilities. Beagle red-teams digital journeys with autonomous agents, while Copilot helps teams detect threats and fine-tune defenses using platform-native intelligence.

“Both your best customers and your biggest adversaries are now using agentic AI,” said Alisdair Faulkner, CEO and co-founder of Darwinium. “Solving that problem requires a platform that speaks the same language.”

The problem with agentic AI

The fraud landscape is shifting. Attackers no longer rely on simple bots with rigid scripts; they use AI agents that act like real users. These agents mimic human behavior, adapt to security controls, and learn from failed attempts.

“The challenge isn’t detecting automation anymore,” Faulkner noted. “It’s distinguishing good automation from bad.”

This creates a hard trade-off for companies in e-commerce, fintech, and banking. If you block AI agents outright, you risk frustrating customers and potentially missing out on business opportunities. But if you allow them without proper controls, you could open the door to large-scale fraud.

“We’re at an iPhone moment for AI,” Faulkner explained. “Ten years ago, people said they’d never enter credit card details into a phone. Today, 95 percent of purchases happen on mobile. AI agents will follow the same path.”

Beagle: Red-teaming with autonomous adversaries

Beagle simulates fraud attacks using autonomous agents that emulate real user behavior. It can navigate a full customer journey, from account creation to checkout, using spoofed devices, synthetic identities, and behaviorally accurate interaction patterns.

This is not a test sandbox or a rules check. Beagle actively probes for weak spots across a company’s live defenses, including biometrics, device fingerprinting, velocity checks, and bot scoring.

“The idea is to build both the attacker and the defender at the same time,” Faulkner explained. “That way, we can simulate and iterate faster than humans ever could.”

Beagle integrates with Darwinium’s platform and requires no deep customer integration. Teams simply define the use case they want to test, such as onboarding or login, and Beagle does the rest.

“You give it an objective and it produces a detailed audit,” Faulkner described. “What tools you had, what it bypassed, where friction occurred. It’s zero-integration, but high insight.”

Copilot: Helping fraud teams move faster

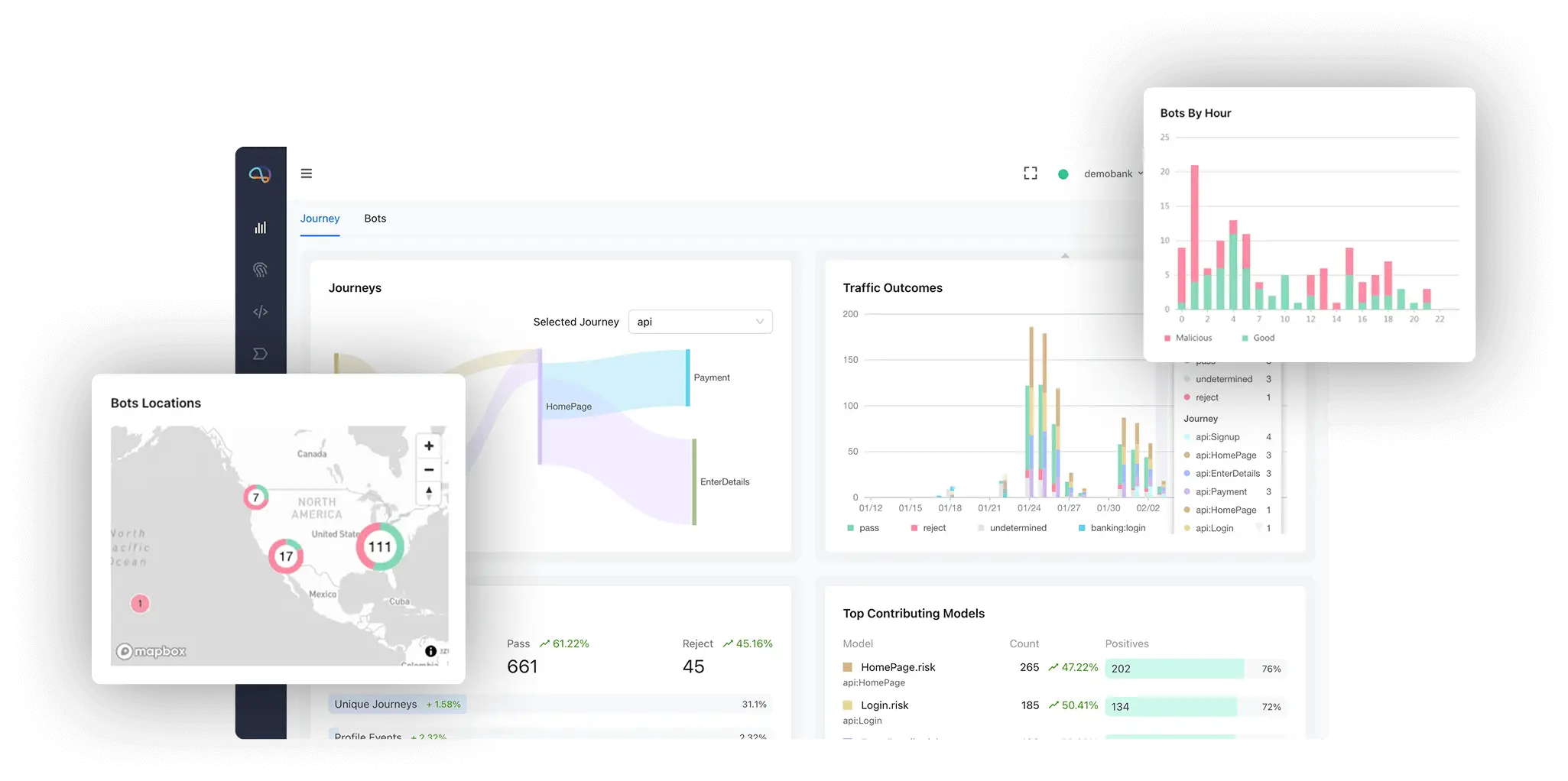

Copilot is a conversational assistant built into Darwinium’s platform. It helps fraud and security teams search behavioral data, generate detection rules, evaluate the impact of policy changes, and automate common tasks.

Copilot uses customer-specific data to suggest new features or detection logic. It can also estimate how different rules affect fraud loss and user friction, making it easier to balance protection and experience.
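To make the loss-versus-friction trade-off concrete, here is a minimal sketch of how a candidate rule could be replayed against labeled historical events. It is an illustration only, not Darwinium's implementation: the `Event` fields, the `evaluate_rule` helper, and the sample rule are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass(frozen=True)
class Event:
    """Hypothetical labeled historical event (not a Darwinium schema)."""
    amount: float
    attempts_last_hour: int
    is_fraud: bool


def evaluate_rule(rule: Callable[[Event], bool], events: Iterable[Event]) -> dict:
    """Replay a rule over past events and summarize its impact."""
    events = list(events)
    flagged = [e for e in events if rule(e)]

    # Fraud loss: money tied to fraudulent events, split by whether the rule caught it.
    fraud_total = sum(e.amount for e in events if e.is_fraud)
    fraud_blocked = sum(e.amount for e in flagged if e.is_fraud)

    # Friction: share of legitimate events the rule would have interrupted.
    legit = [e for e in events if not e.is_fraud]
    legit_flagged = [e for e in flagged if not e.is_fraud]

    return {
        "fraud_loss_prevented": fraud_blocked,
        "fraud_loss_remaining": fraud_total - fraud_blocked,
        "friction_rate": len(legit_flagged) / len(legit) if legit else 0.0,
    }


# Assumed sample data and an assumed velocity-style rule, for illustration only.
history = [
    Event(amount=100.0, attempts_last_hour=1, is_fraud=False),
    Event(amount=50.0, attempts_last_hour=6, is_fraud=False),
    Event(amount=400.0, attempts_last_hour=8, is_fraud=True),
    Event(amount=200.0, attempts_last_hour=2, is_fraud=True),
]
report = evaluate_rule(lambda e: e.attempts_last_hour > 5, history)
```

Comparing the report for several candidate thresholds shows how tightening a rule trades prevented loss against the share of legitimate users who hit friction.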

“We don’t believe in bolt-on AI,” Faulkner noted. “If AI isn’t built into the platform from the start, it’s just lipstick on a pig.”

Copilot can summarize complex risk events, interpret graphs and dashboards, or generate rules based on analyst prompts. It is designed to make security teams more productive without needing deep platform knowledge.

“There’s no one person in most organizations who understands fraud, security, and product risk end-to-end,” Faulkner said. “Copilot bridges that gap.”

Built for the edge, designed for real-time

Darwinium is deployed at the edge, integrated into CDNs like Cloudflare and Akamai. This allows it to analyze user behavior and intent as close to the user as possible, with minimal latency and no disruption to the experience.

The platform observes every step in the user journey, scoring risk before, during, and after login. This helps detect behavior that looks safe at first but turns malicious deeper in the session.

“You can’t rely on perfect authentication at login anymore,” Faulkner explained. “You need progressive trust. That means watching what users do across the full session to understand intent.”
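One way to picture progressive trust is a running risk score that is updated at every step of a session, not only at login. The sketch below is a simplified illustration under stated assumptions: the step names, point values, and thresholds are invented for the example and do not describe Darwinium's scoring.

```python
from dataclasses import dataclass, field

# Hypothetical risk adjustments per journey step, in points on a 0-100 scale.
STEP_RISK = {
    "login_ok": -20,
    "view_product": 0,
    "change_email": 30,
    "add_payee": 40,
}
UNKNOWN_STEP_RISK = 10  # assumed small penalty for steps we have not profiled


@dataclass
class SessionTrust:
    """Tracks risk across a whole session instead of deciding once at login."""
    risk: int = 50
    history: list = field(default_factory=list)

    def observe(self, step: str) -> str:
        # Update the running score and clamp it to the 0-100 range.
        delta = STEP_RISK.get(step, UNKNOWN_STEP_RISK)
        self.risk = max(0, min(100, self.risk + delta))

        # Map the current score to an action (thresholds are assumptions).
        if self.risk >= 80:
            decision = "block"
        elif self.risk >= 60:
            decision = "step_up"  # e.g. ask for additional verification
        else:
            decision = "allow"

        self.history.append((step, self.risk, decision))
        return decision


session = SessionTrust()
decisions = [session.observe(s) for s in ["login_ok", "change_email", "add_payee"]]
```

In this toy run the session passes login cleanly, yet the later sensitive actions push the score up until the final step is blocked, which is the kind of "safe at first, malicious later" pattern the article describes.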

Why this matters now

AI-generated fraud is already happening. Voice biometrics, selfie-based verification, and other digital ID checks are being targeted by AI tools that can now mimic human inputs with alarming precision.

“We’re heading toward a moment where AI will learn across a million websites a day how to get better at fraud,” Faulkner shared. “It won’t be a slow burn. When it hits, it will hit hard.”

For CISOs, the key takeaway is this: defending against fraud takes more than traditional rules or basic AI features. It calls for tools that can simulate attacks, understand intent, and continuously adapt.

Beagle and Copilot offer one approach to meeting that challenge. They are designed to give fraud and security teams the same kind of smart, scalable automation that attackers are already using.

To learn more or book a demo, visit darwinium.com.